Part of the Learning to See series:

- Learning to See (<- info here)

- True Colors

- Gloomy Sunday

- We are made of star dust

- Learning to Dream

- Learning to Dream: Supergan!

- Learning to See: Hello, World!

- Dirty Data

- “Learning to See” studies

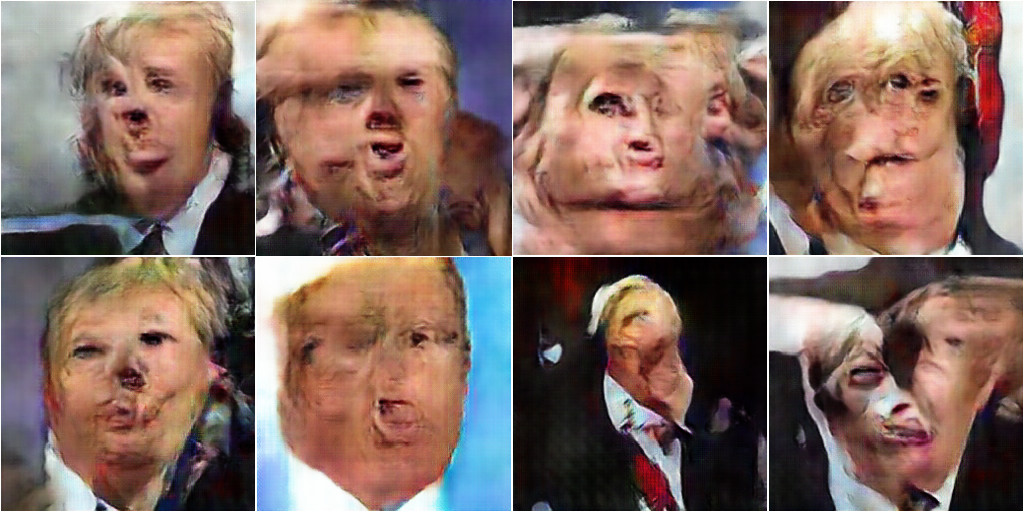

Traditionally, when working in machine learning, and especially with generative models, it’s common to ‘sanitise’ one’s data: to clean it, check for abnormalities or outliers, preprocess it and so on. For example, if training on faces, one might go through all of the raw data and make sure that each image is indeed of a face, is of good enough quality, and has lighting conditions within acceptable variances. One might then crop all images in the same way around the face, and maybe even line them up so that the main features are aligned. This all happens *before* the data goes into the neural network. The ‘best’ (i.e. most ‘realistic’) results from deep generative models tend to come when the input data is preprocessed in this manner.
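To make that kind of sanitising concrete, here is a minimal sketch in plain Python. It is not the pipeline used for any particular model, just an illustration under stated assumptions: images are 2D grayscale lists of rows with pixel values in [0, 1], and the names `sanitise` and `center_crop`, as well as the size and brightness thresholds, are hypothetical. Real face detection and feature alignment are left out entirely.

```python
from statistics import mean


def center_crop(img, size):
    """Cut a size x size window out of the middle of a 2D image (list of rows)."""
    h, w = len(img), len(img[0])
    top, left = (h - size) // 2, (w - size) // 2
    return [row[left:left + size] for row in img[top:top + size]]


def sanitise(images, size=4, min_brightness=0.2, max_brightness=0.8):
    """Drop images that are too small or badly lit, then crop the rest alike.

    The thresholds are illustrative assumptions, not recommended values.
    """
    clean = []
    for img in images:
        h, w = len(img), len(img[0])
        if h < size or w < size:
            continue  # too small to crop to the common size
        brightness = mean(p for row in img for p in row)
        if not (min_brightness <= brightness <= max_brightness):
            continue  # lighting outside the accepted range
        clean.append(center_crop(img, size))
    return clean


# Three toy "images": one acceptable, one far too dark, one too small.
raw = [
    [[0.5] * 6 for _ in range(6)],
    [[0.0] * 6 for _ in range(6)],
    [[0.5] * 2 for _ in range(2)],
]
kept = sanitise(raw)
```

With the toy data above, only the first image survives, cropped to 4 x 4. ‘Dirty’ data simply means skipping a step like this altogether.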

I was curious: what happens when you use ‘dirty’ data? Does the network learn anything? If so, what does it learn? And most crucially: is there anything interesting we can get out of it?

So here I train the network on ‘Dirty Data’: images scraped from Google image search, of Donald Trump, Theresa May, Nigel Farage, Marine Le Pen, Recep Tayyip Erdogan and Vladimir Putin. Not in any way cleaned, cropped, filtered, aligned or sanitised. Not even checked to see whether the images do indeed contain the subjects, or anyone else, other objects, scenes etc. Anything remotely related, as deemed by Google image search, is dumped into the magic cauldron as is.

This is also very much inspired by Brian Yuzna’s 80s horror classic Society (the Wikipedia page is SFW), a grotesque satire on non-egalitarian societal structures and the tensions between the elite and the working classes. You can find images and clips on Google and YouTube, but be warned: they are very grotesque and NSFW, and you won’t be able to unsee what you have seen (though with an 80s comedy-horror twist).

Acknowledgements

Created during my PhD at Goldsmiths, funded by EPSRC UK.