2018. Medium: Multi-channel large-scale video and sound installation. 3, 5 or 7 channel 4K video. Stereo audio. Duration: 60 minute seamless loop. Technique: Custom software, generative video, generative audio, Artificial Intelligence, Machine Learning, Generative Adversarial Networks, Variational Autoencoders.

Editions: 3+2AP

Collections: Guy & Myriam Ullens Foundation

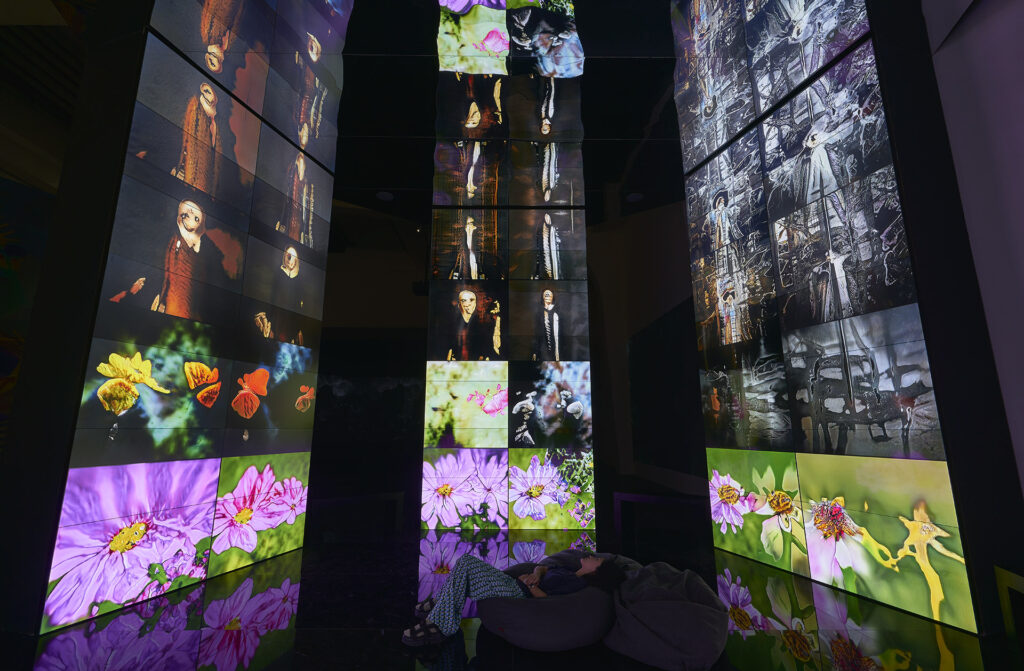

There are three options for presenting the work (see below for examples):

1. 3 channel landscape: 3x projections or LED screens, each with aspect ratio 2:1, minimum size 4m x 2m.

2. 5 channel monolith: 5x vertical LED screens, each with aspect ratio 1:2, minimum size 2.5m x 5m.

3. 7 channel screen: 7x vertically mounted screens, sizes 55″-85″.

“It feels like the machine is trying to tell me something…” – audience member

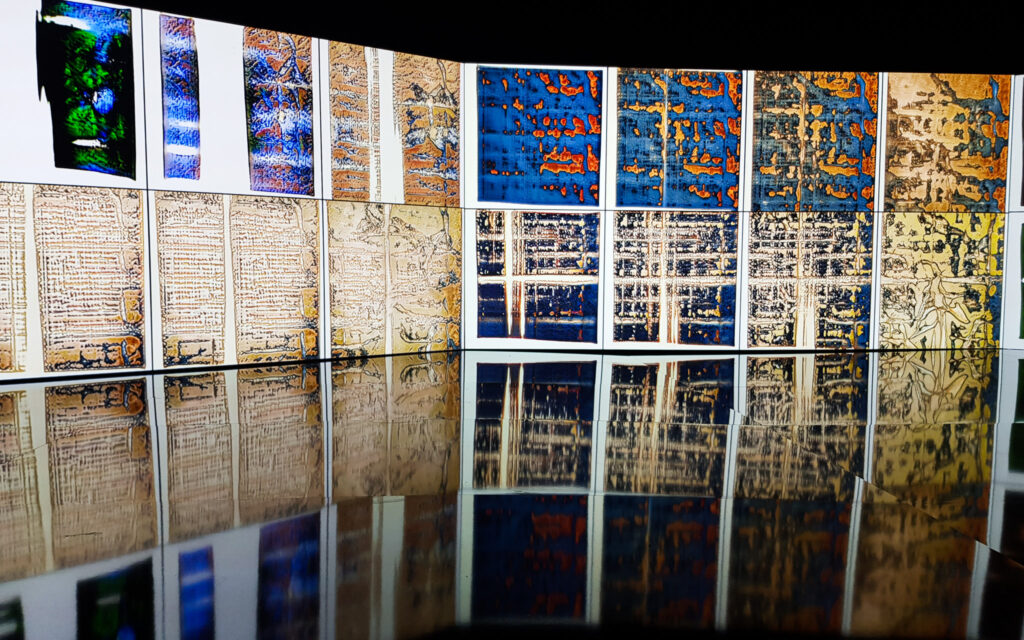

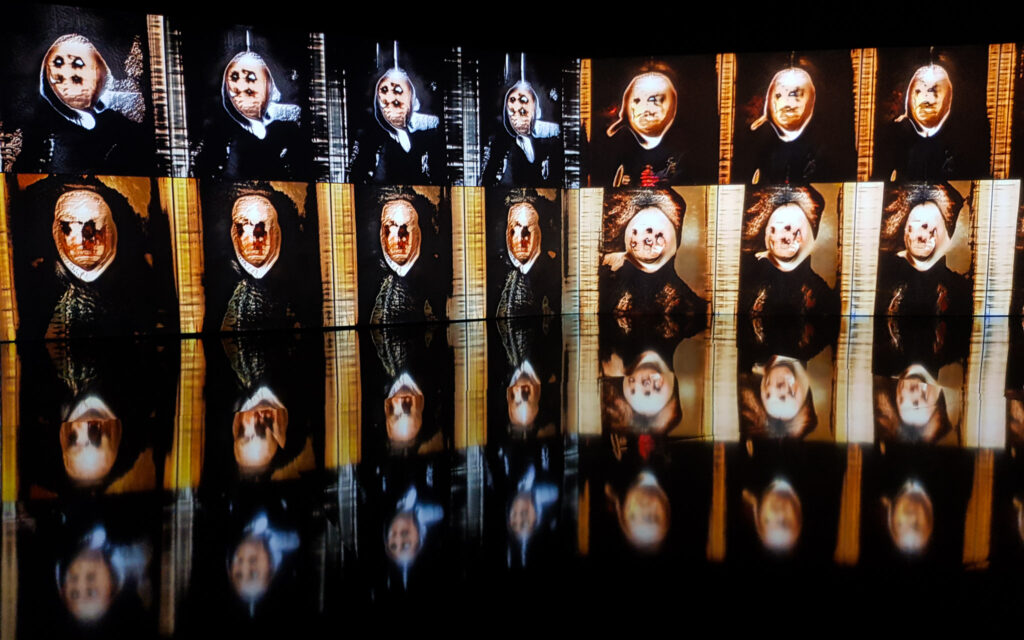

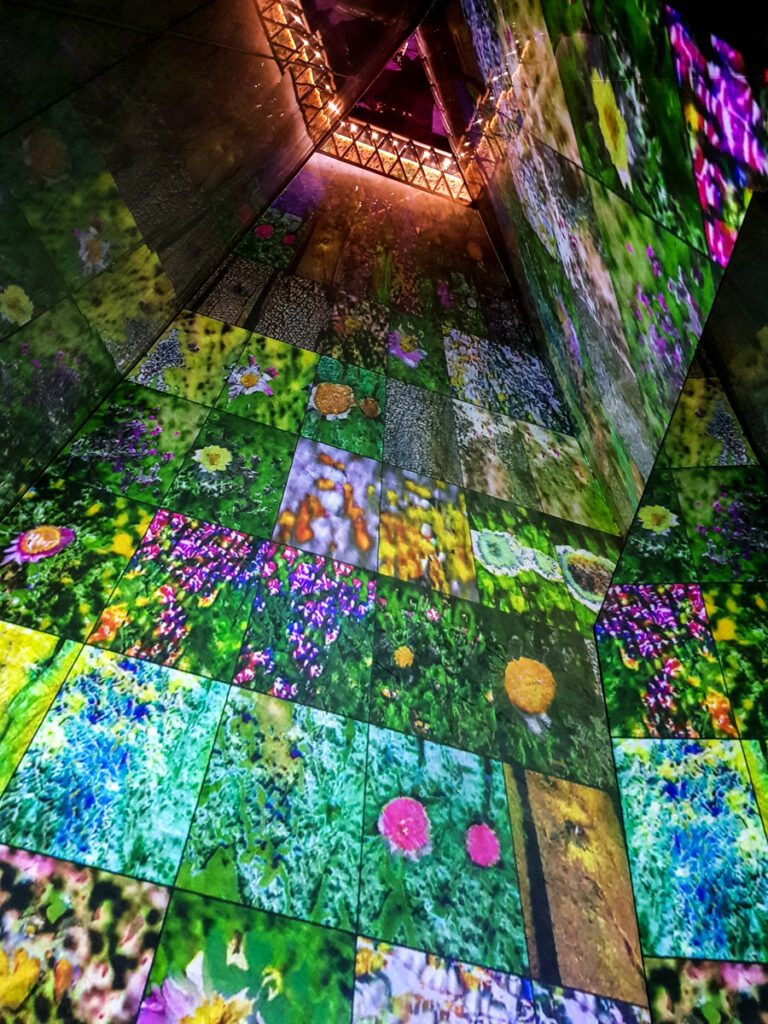

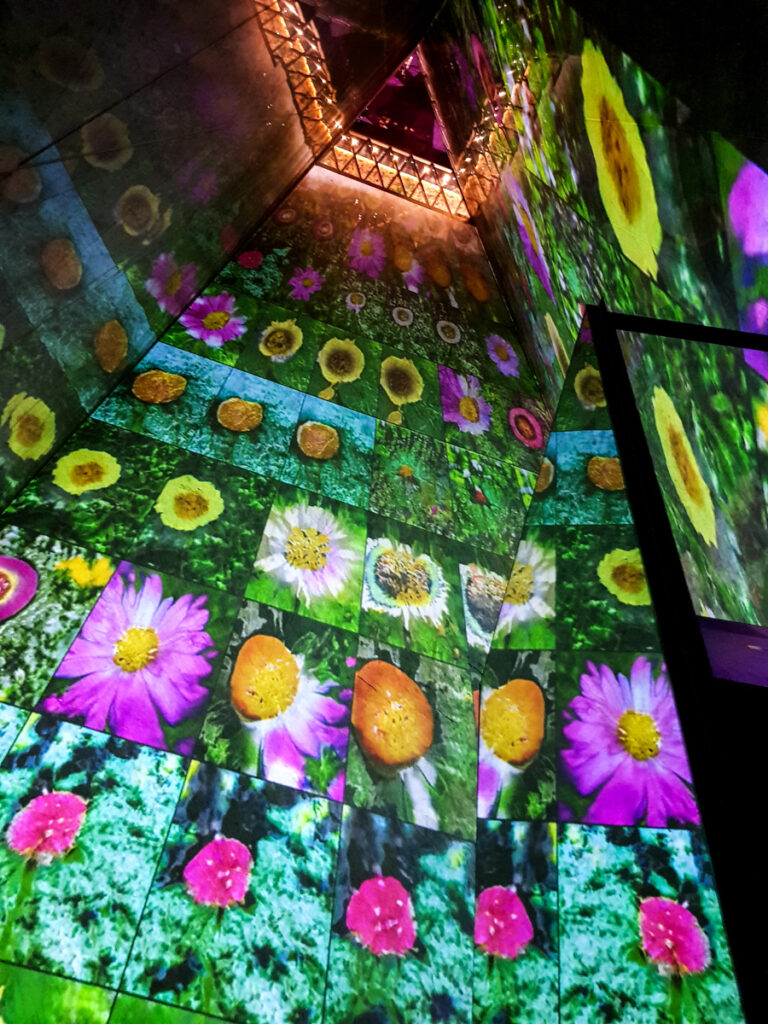

Deep Meditations: A brief history of almost everything in 60 minutes is a large-scale video and sound installation; a multi-channel, one hour abstract film; and a monument that celebrates life, nature, the universe and our subjective experience of it. The work invites us on a spiritual journey through slow, meditative, continuously evolving images and sounds, told through the imagination of a deep artificial neural network.

We are invited to acknowledge and appreciate the role we play as humans as part of a complex ecosystem heavily dependent on the balanced co-existence of many components. The work embraces and celebrates the interconnectedness of all human, non-human, living and non living things across many scales of time and space – from microbes to galaxies.

This is a landmark early Generative AI film, created with meaningful human control over the narrative (as opposed to the more typical images randomly morphing between each other). Arguably the world’s first film constructed in and told entirely through the latent space of a Deep Neural Network, with meaningful human control over narrative. To create this piece with the desired narrative, I developed a bespoke system allowing me to construct precise journeys in the high dimensional latent space of a generative model. Constructing dozens of paths swirling around each other, distributed across dozens of screens in an immersive space, the abstract narrative takes us through the birth of the cosmos, formation of the planets and earth, rocks, and seas, the spark of life, evolution, diversity, geological changes, formation of ecosystems, the birth of humanity, civilization, settlements, culture, history, war, art, ritual, worship, religion, science, technology.

Conceptually, the piece is an exploration of how we make meaning, and what we consider to be important. What does love look like? What does faith look like? Or ritual? worship? What does God look like? Could we teach a machine about these very abstract, subjectively human concepts? What would it tell us about what we hold sacred, in return? As these ideas have no clearly defined, objective visual representations, I trained an artificial neural network instead on our subjective experiences of them. Specifically, I downloaded hundreds of thousands of images from the photo sharing website Flickr, tagged with these words (and many more) to train a generative neural network.

The artwork has been exhibited worldwide and is in the collection of Guy & Myriam Ullens Foundation, while I presented the underlying research at NIPS (now NeurIPS), one of the most prestigious academic conferences in AI, and discuss in depth in my PhD Thesis.